How we build evals for AI Agents

Growth and revenue teams lose hours every week to research, qualification, and writing tasks that feel simple but require thorough research, deep comprehension of both product and prospect, and non-obvious judgement calls. Our mission at Unify is to create an always-on system that automates the manual, repetitive work while making growing revenue observable, scalable, and repeatable.

To enable this automation, we have started by building the foundation of our always-on system, which is composed of a number of AI features; both single-turn AI and multi-turn agents.

Single Turn AI

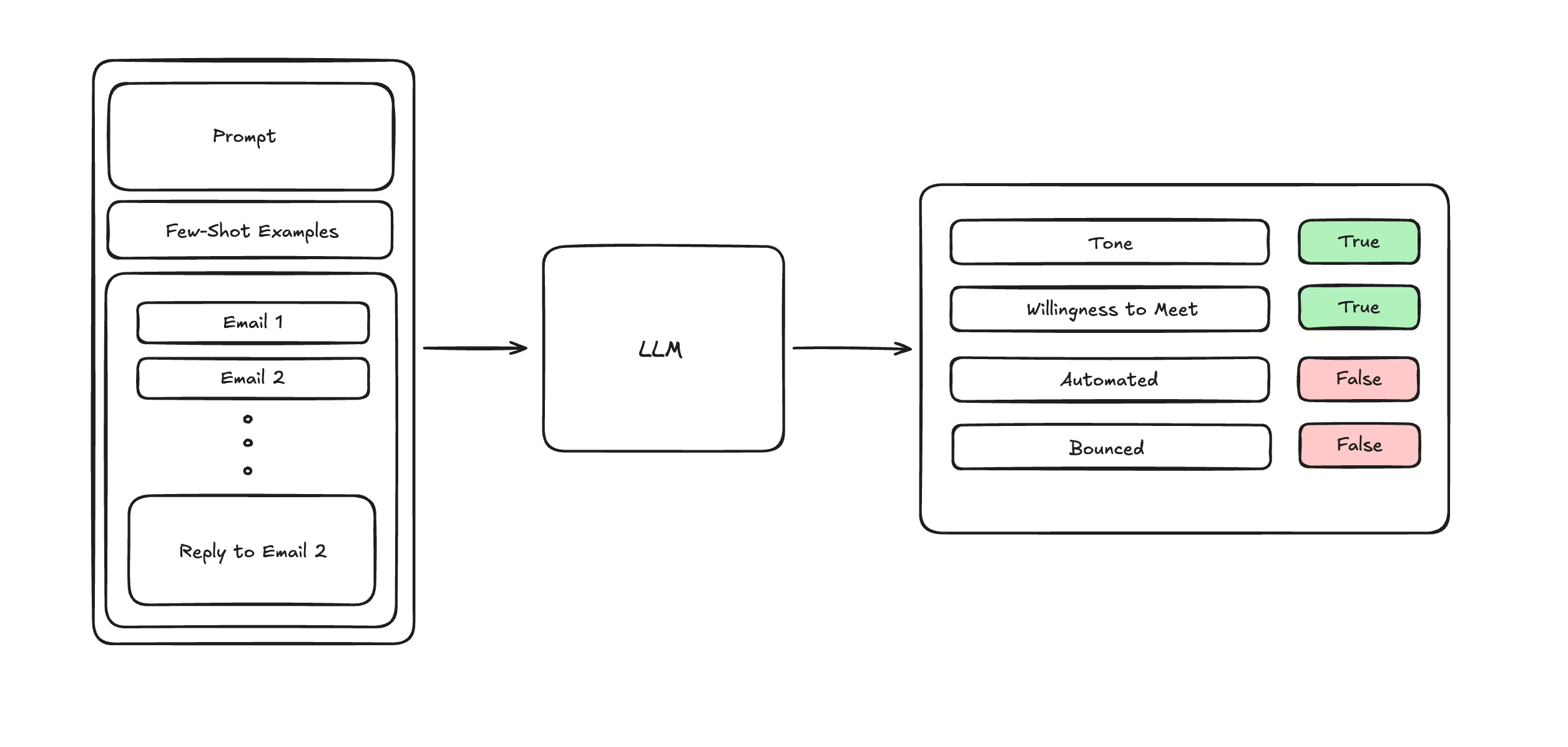

Single turn tasks are atomic; given a prompt and context, we make one forward pass through the model and return the final output. This mirrors traditional ML inference where a model takes an input and produces an evaluable result.

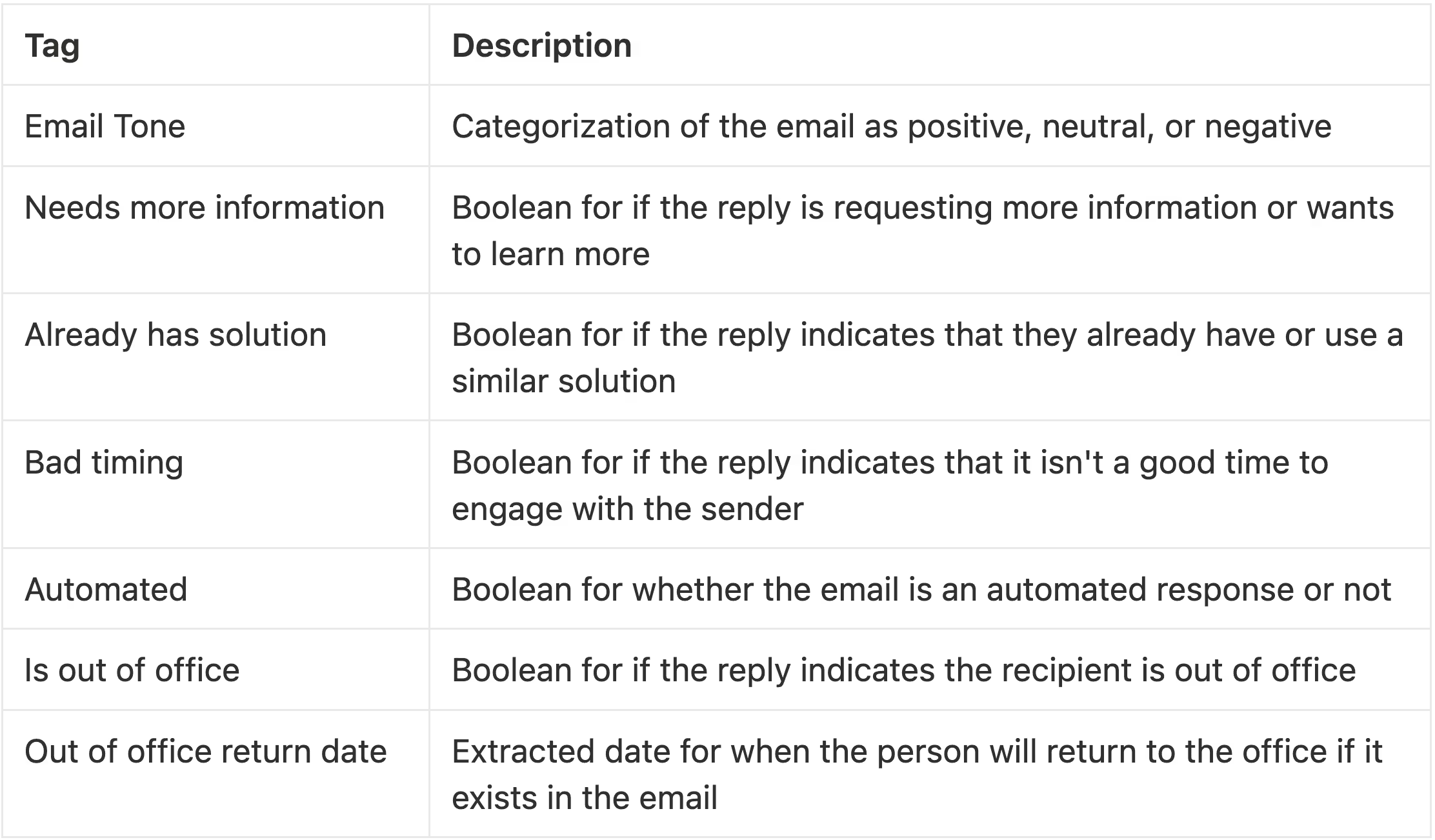

Reply Classification for Unify Sequences is an example of single turn AI. Sequences are Unify’s built-in engine for multi-step, multi-channel outbound campaigns for emails phone calls, and manual tasks. Reply Classification is the pair feature that provides analytics into the performance of email sequences by using LLMs to evaluate and extract attributes on a reply to a sequence. The schema of the data we extract from every email reply to a sequence includes the following:

These tags capture the signals that we use to judge the success of a sequence, but they aren’t mutually exclusive. An email might sound positive yet still say it’s “not the right time,” or read as neutral while asking for more information. Because multiple labels can coexist on a single reply, it is a multi-label problem and the overlap between labels makes evaluation trickier than a simple single-class accuracy score. A false positive on a field like “is out of office” could pause outbound sequences, while an incorrect classification of tone can skew perception of how well the sequence is performing.

To start evaluating models on this task, we constructed a dataset of real email chains and used human labeling to create a ground truth. We then measured the LLM’s performance by using traditional classification accuracy, where all predicted tags are scored against the ground truth tags for a given email. We quickly found that overall accuracy was misleading, as the LLM could have a higher correct classification rate for whether an email was automated or not, but a lower classification rate for whether the email indicated that the sender already has a solution. Since the importance of different classes to a user varies significantly, we needed to adjust this metric to weigh the different classes by their importance.

We adjusted this evaluation criteria to measure overall accuracy, weighted accuracy, and accuracy just on tone. Weighted accuracy places more emphasis on tags that Unify can take action on such as “needs more information”, “is out of office”, “already has solution” and deprioritized tags that were near 100% accuracy. Tone accuracy isolated the performance of sentiment analysis. Breaking down accuracy into these metrics immediately exposed gaps that classical accuracy hid, enabling a clearer signal for model iteration.

Multi-Turn Agents

Introducing AI Agents was a paradigm shift for AI product development at Unify.

Our AI Agents are research focused; users can provide questions about a person or company, and guidance on any information that would be helpful to conducting this research, and the agent will search and utilize tools until it can answer all of the questions. Our agents are armed with a number of tools like the ability to search the internet, scrape webpages, retrieve HTML from a webpage, access datasets for person level data, and use a browser. AI Agents have enabled a variety of research tasks such as scanning the news for recent crime, reading financial reports, or even figuring out what restaurant gift card to send a prospect based on their location.

Agents are multi-turn systems and are not deterministic. Given a objective they will work until they reach a conclusion. This makes evaluating performance much more difficult as success is not binary and there are now stochastic paths, intermediate decisions and reasoning, external data sources (tools), state management, and compound objectives.

The obvious first evaluations to run with multi turn systems are the same accuracy metrics from single turn AI. It requires creating datasets of tasks that have measurable and structured results with booleans, numbers, or enums. The agent can be run against these tasks, and accuracy is measured on the correctness of the outputs against the true outputs. While this is a decent first pass at evaluating the capability of a system to complete the task, if the resulting accuracy is not exceeding the threshold for production ready performance, it doesn’t provide enough information to understand where things are going wrong.

With Agents there are numerous failure modes that are not captured by single turn metrics:

- Incorrect trajectory: Agents plan their own paths and iterate through different tools and nodes until they think they have completed the objective. There is not a singular best path for every task, but there are certainly wrong paths and bad paths. Accuracy captures the wrong paths, but does not provide understanding for the rest; if a task was completed with perfect accuracy, but the agent took ten times as long to complete as before, repeated work, or got stuck in loops, the system is not working optimally.

- Poor tool choice & poor tool output: Choosing the correct tools for a given task is critical to surfacing the right information to continue to make research decisions. Different tasks have optimal tool choices and tool orders, for example in general research it may be better to fan out and then deep dive, using Google first, then scraping specific results from the initial search. Choosing a provider for internet search for our Agent highlighted the need to be able to evaluate tool outputs as well. We needed to assess internet coverage, ease of use by the Agent, and availability. Accuracy may be a proxy of tool choice and its outputs, but does not clearly identify if the root cause of poor performance is model or tool related.

- Inconsistent conclusions: Since agent runs can have different trajectories, different tool results, and different conclusions per run, consistency across runs needs to be measured. Accuracy on single turn AI is relatively stable, with lower standard deviation since inputs and outputs are largely controllable, but when trajectories are not fixed and the same data is not guaranteed, the standard deviation across repetitions of an evaluation are extremely important to understanding stability.

These failure modes are not comprehensive but underscore why different evaluations and metrics need to be created to measure system performance and to aid in identifying underlying issues.

To counter these challenges, a new set of evaluations and metrics needs to be developed to track changes over time, monitor for regressions, and enable rapid iterations on architecture, tools, and models within a system. The metrics we now use for multi turn systems include:

- Plan quality (human + llm-as-a-judge): Since our research agents explicitly plan before conducting research, we needed a way to evaluate the quality of the plans generated by different models. To do this we employ both human evals and a judge LLM that uses a rubric based off of characteristics that we use ourselves to spot check generated plans. The judge LLM looks for thoroughness, precision in instructions, adherence to guidance provided by the user, and penalizes for incorrect assumptions made without conducting research.

- Tool Choice & Tool Diversity: Scoring tool choice is done on a per task basis, in which specific research tasks require at least one specific tool to be used in order for research to be successfully completed. Diversity is measured as the number of unique tools employed to complete a research task, this enables us to understand the distribution of tool calls across different tasks by an agent configuration.

- Efficiency: Scoring efficiency is done simply through the number of steps taken to solve a research task where a step is one of the core phases available for that agent (ex. plan phase, reflect phase, or tool call phase).

- Reliability: We measure reliability by looking at the standard deviation of the answers across 5 runs of the same evaluation.

- Generalizability: This is a meta evaluation that is constructed through running an Agent through a number of different research task evaluations to see how well a specific configuration performs.

These metrics have helped uncover a number of suboptimal outcomes in testing such as:

- Failure to compare dates, we found that even recent models struggled to determine future or past dates given unstructured data containing dates and the current date. This was uncovered by the reliability score and an evaluation data set focused on extracting online data from a specific time range.

- Missing tool calls, we found cases where the agent was not calling any tools and completing its research. Efficiency was abnormally high and tool diversity was low which indicated that the agent was skipping steps to get to a conclusion. We found the agent using a combination of pre-training knowledge and inputs from the user to come to a conclusion without verification.

- Producing inconsistent results for the same research task (if a task is re-run N times, is the outcome consistent between runs). This was uncovered through the reliability metric with high standard deviations on evaluation metrics between runs.

- Incorrect utilization of new tools for the wrong use cases. This was uncovered through the tool choice metric which identified that specific tools were not being invoked properly over the course of an agent path.

To develop a comprehensive set of evals, we identified high frequency research use cases, and also common failure states that we had seen in developing these features. By combining the use cases with these metrics, we now have a way to both qualitatively and quantitatively evaluate the models and systems we build to ensure that common information retrieval, generation, and synthesis tasks are done accurately and effectively.

Evals

Here’s an overview of the evals that we have built to test our Agents on:

- Firmographics & Technographics: Firmographics measures the ability for an agent to accurately retrieve information about headquarters, headcount, industry, geographic presence, funding, and legal structure for a company online. Technographics measures the ability of the agent to identify and retrieve technologies and software that a person or company uses (i.e. SOC2, Auth0, etc).

- Account Qualification: Measures the ability for an agent to identify ways to find qualifying characteristics with internet research and general tool usage. We use a combination of accuracy with human-labeled data for deterministic outputs and LLM-as-a-judge to evaluate more abstract outputs like recent news and social engagement. The categories below are individual evals that we wrap up into a single metric for overall account qualification performance.

- Growth Signals (Fundraise, Headcount)

- Hiring Signals / Recent Hires / Org Changes

- Recent News (Partnerships, Rebrands, Launches)

- Social Engagement (employee or company level chatter)

- Entity Recognition (ability to understand and match entities to research subjects)

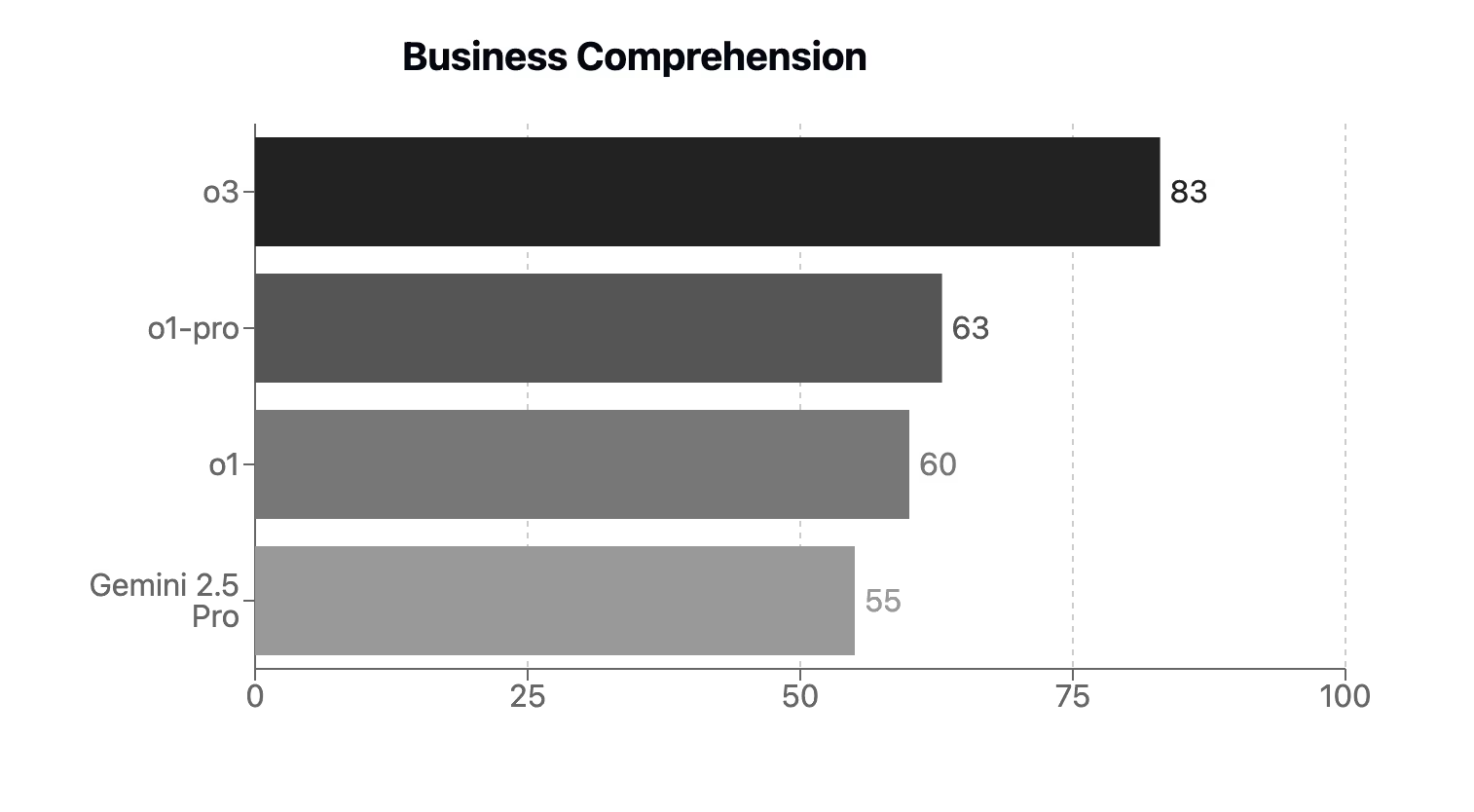

- Business Understanding: Generating context about a prospective customer as well as your own business is essential to identifying the highest priority leads and ideal customer profiles. We have found that this task is only completed accurately by reasoning agents. We use an LLM-as-a-judge and a human evaluation to check the reasoning agent’s ability to identify and understand the core components and signals about a given business.

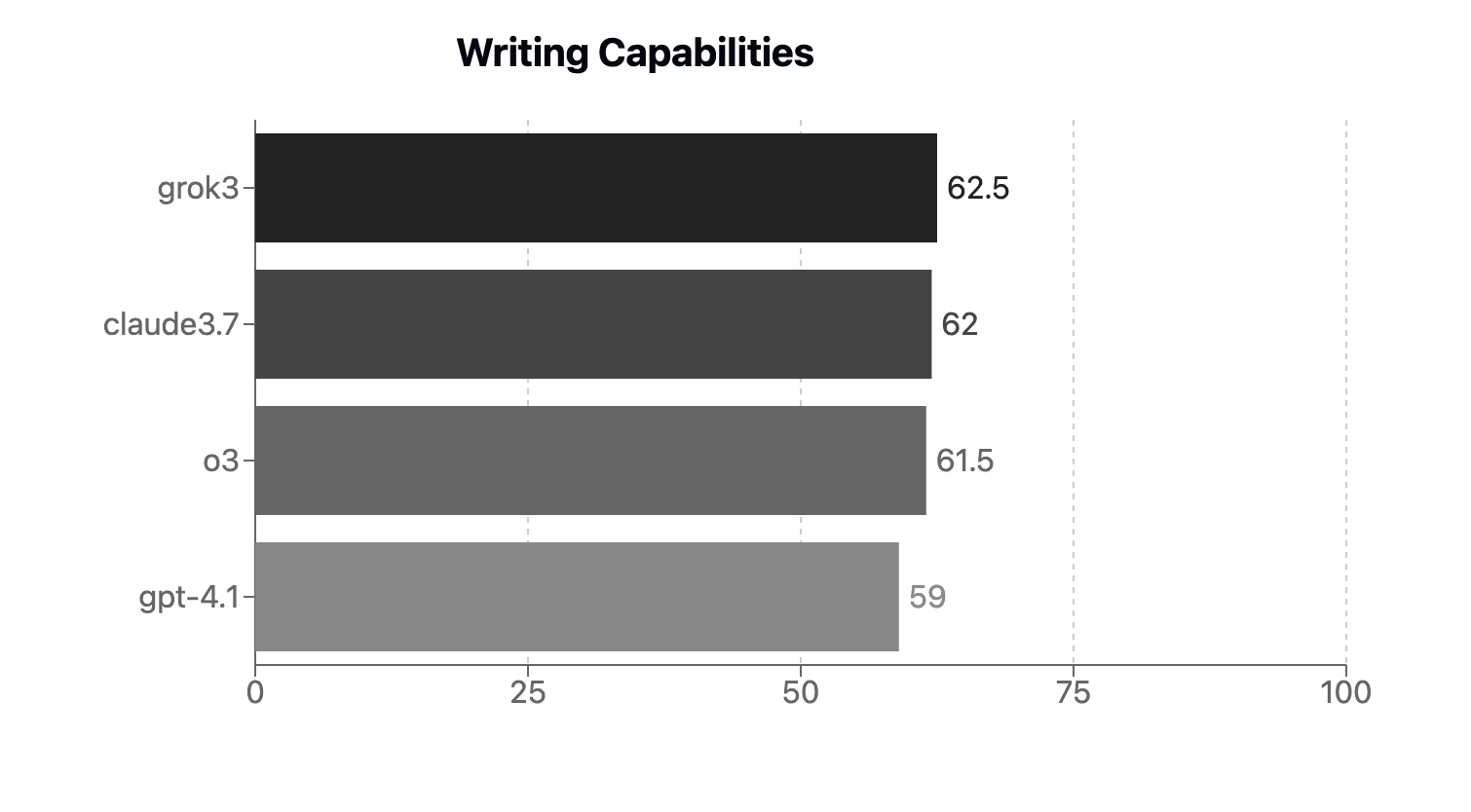

- Writing Capability: Drafting first touch emails or copy for prospects using relevant context from research is a core writing tasks that is used in Unify that we have model level evals for. We use an LLM-as-a-judge alongside human feedback with rubrics for copy to evaluate the quality of generations.

The charts below highlight some of the interesting findings from benchmarking new models and evaluating agent systems. We have decided to show a different grouping of models for each eval as we have found models tend to spike in different capabilities, and generally a one size fits all approach is suboptimal. For example, we recently replaced o1 with gpt-4.1 in our agent as we saw improvements in plan quality and as a result tool use alongside reductions in both cost and latency.

The results shown on the Account Qualification and Firmographic / Technographic evaluations are scored as a composite of accuracy, tool efficiency, and plan quality across all of the sub tasks, capturing how well a given agent can generalize across many different research tasks.

Our writing and business comprehension evaluations are more difficult to measure quantitatively and cannot be captured by accuracy, and as a result use both human evaluations and llm-as-a-judge. By defining a rubric to score generations against, a judge model is able to assess the quality of a generation on a set of scales. For business comprehension, our rubric includes specificity (how detailed is the completed business specification by the agent), thoroughness (can the judge llm identify any gaps in the analysis), and alignment (a common sense check by the judge to determine if the analysis aligns with the information that was provided and expected outputs). The judge model grades each of these axes on a scale of 1 to 10, compiling a total score as the scaled sum of individual scores. Previously, o1-pro was the best performing model at generating these reports, but now o3 has significantly outperformed all existing models at this task.

Writing capability is something that we are still exploring as we’ve found models when prompted in the same way, formed a consensus around the style and quality of output. We also use an llm-as-a-judge to evaluate the writing using axes such as complexity, formality, tone, personality, sentence structure, concision, and vocabulary, and scoring each attribute on a scale of 1 to 10, to form a total score.

While these evals have given us a lot of signal in where we can improve our Agents, they are still not exhaustive and we are continually working on finding new ways to pressure test our systems on tooling, model reasoning and inference capabilities, as customers continue to come up with unique and imaginative ways to leverage our AI systems. We treat evals as a cornerstone to improving our AI features and Agent capabilities, and we will need to build new evals and metrics on an ongoing basis to ensure that our systems continue to improve and can be measured in uncharted research tasks.

If you are interesting building systems that apply frontier models to unstructured problems like go-to-market, join our team.

%20(1).png)

%20(1).avif)

.avif)

.avif)

%20(2).avif)

.avif)