High leverage engineering in an early startup

From day one at Unify, we set out to create the platform that will power the next generation of sales, marketing, and growth teams. We knew that in order to pull it off, we were going to need to build a lot of product.

In a world with countless uninspiring sales products and data providers, our hypothesis was that a single platform that unified the ecosystem with native AI capabilities and best-in-class features, design, and UX would be a game changer.

Two years into the journey, we’ve executed on that vision and scaled our product to hundreds of customers. In 2024, we successfully grew our revenue and product usage by nearly 25x, and we achieved that with a lean team of fewer than ten engineers.

There are some core technology choices and shared patterns our engineering team has embraced over the past two years of product development that have given us enormous leverage. Some of these were early bets, and some emerged through trial-and-error over the course of the journey. These choices shape our product velocity and quality, and we believe the same principles apply to many founding teams at ambitious early-stage companies.

Why we use Typescript for (almost) everything

We chose Typescript as our primary language for both frontend and backend development. On the frontend, our web app is powered by React + Vite. On the backend, our services run on Node.js in Kubernetes.

What this unlocks is the ability to share types seamlessly across the stack. Strong end-to-end type checking without room for inconsistencies across language boundaries results in a meaningfully easier development experience and a more reliable product for users.

Our Postgres data models are defined using Prisma, which provides high-quality Typescript types and client methods for database operations. We’ve found that in many cases, Prisma eliminates the need to manually create and maintain API layers between business logic and the database. This makes it easier for the type checker to identify problems at build time, not at runtime.

We take advantage of the rich ecosystem Typescript offers on both the frontend and the backend. We’re big fans of xyflow, Tiptap, and D3 as tools for building lovely interfaces. The Fastify web server framework combined with Zod and TypeSpec for schema validation and API definitions has been really successful for our microservices. And expressive utilities like TS-Pattern make it a breeze to write safe, easy-to-read code.

It’s worth mentioning that we also use Python for ML projects, such as AI agents. While there is an ecosystem for ML in Typescript, the best frameworks and libraries are still found in Python. We apply a modern Python development approach that takes advantage of type checking, schema-based validation, and async support. Our Python stack integrates tightly with our monorepo build system thanks to the excellent Astral tooling.

Monorepo development

Our team uses a monorepo to develop all frontend and backend services. This strategy has unlocked enormous velocity and developer experience gains by enabling our team to develop and deploy changes with fewer restrictions imposed by breaking changes and tedious deploy cycles.

In a polyrepo setup, code lives and interacts across multiple repositories—often split by service or team—mandating strict backward compatibility at every boundary. Breaking changes require a careful deprecation process, slowing development when changes span repositories. In a fast-paced product development environment, it happens every day.

With a monorepo, if you need to change something, you change it everywhere all at once. All of the code is built, tested, and deployed together. In the case of problems, rollbacks (with Vercel and Kubernetes deployments) can be simultaneously performed across the frontend and backend to a consistent known-working version of the codebase.

Developer experience also massively benefits from the monorepo. In contrast with split repositories, where tooling, patterns, and structure diverge over time, in a monorepo these aspects remain consistent across projects. Everyone on the team understands the layout of our services, how to build them, and how to run them. A common sentiment from new team members is that once you’ve ramped up on one service, you’re ready to start working on any of them.

Sharing service patterns

Managing a codebase with more microservices than team members is daunting. Distributed systems are complex; even more so when working with unfamiliar code. To tackle this challenge, we’ve placed an emphasis on sharing strong patterns between services.

Asynchronous communication

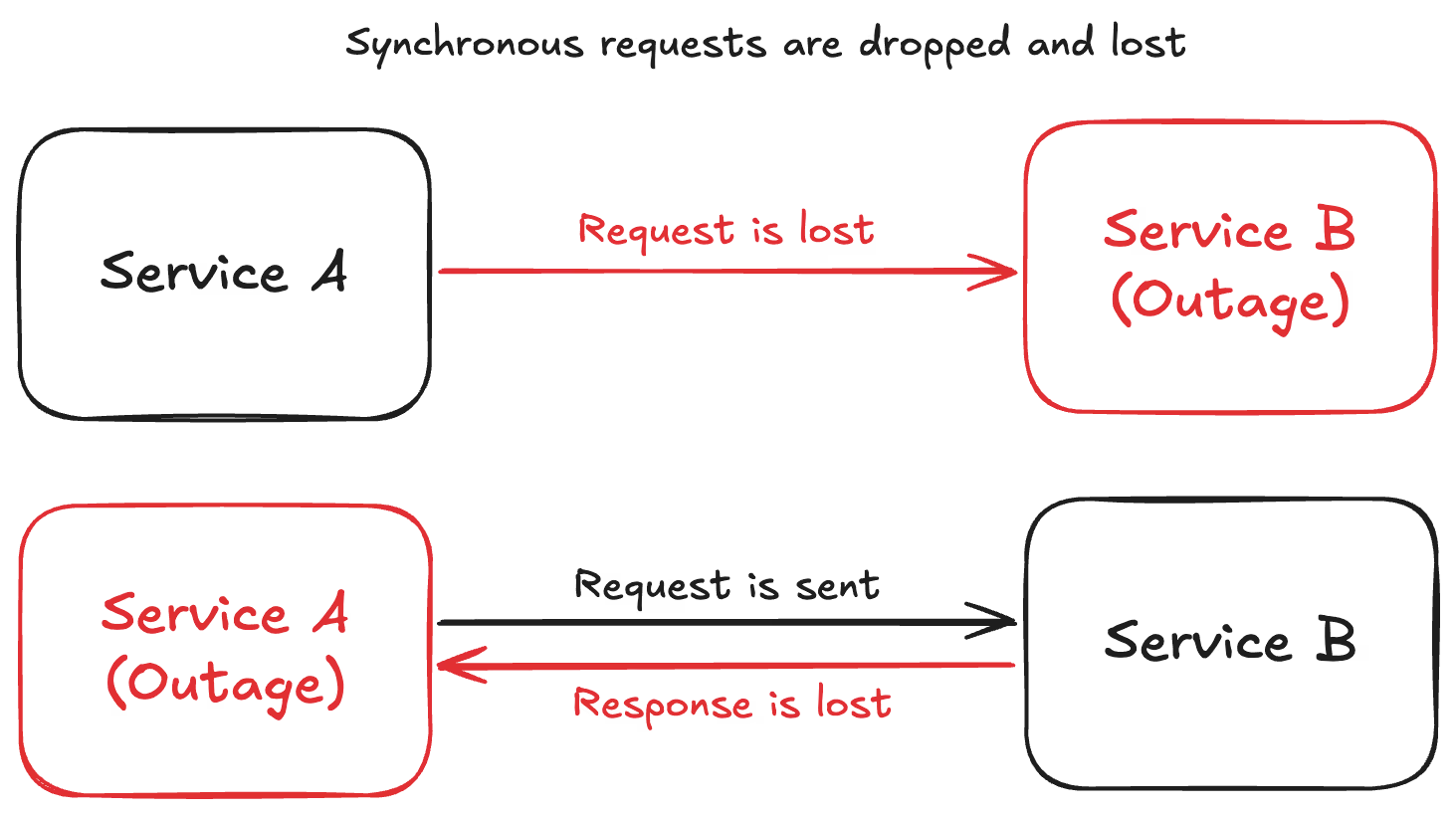

At scale, failures are inevitable—whether due to third-party outages, network instability, database issues, or bugs. How a system endures and recovers from failure is essential. Do services lose data, drop requests, or require manual intervention? Or do they replay failed requests and resume normal operation on their own?

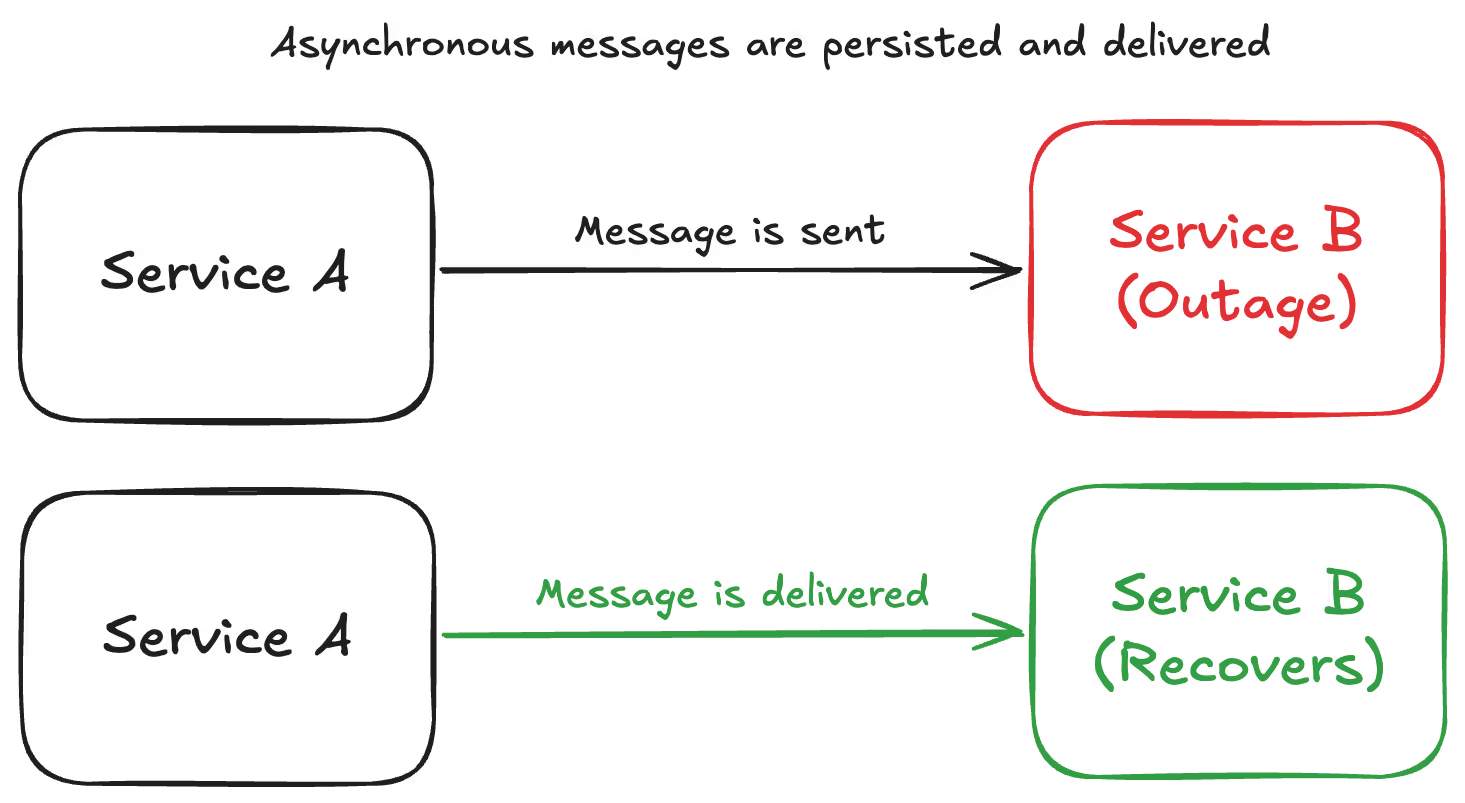

This characteristic is called resilience. In a microservice architecture, one of the most critical patterns to achieving resilience is that communication be asynchronous whenever possible. In this approach, distributed services communicate by passing messages that are persisted in between the sender and the receiver. Message delivery and processing is guaranteed by a reliable intermediary.

We use asynchronous communication wherever possible and practical. The technologies we love using the most for this are Temporal and Apache Pulsar. Temporal is a durable execution framework with general-purpose support for async tasks and schedules. Pulsar provides message queueing and natively supports pub/sub, retries, dead-letter topics, and more. New engineers who learn just these two technologies will understand how essentially all of our inter-service communication works.

These technologies and patterns provide us with strong guarantees that downtime in services will not result in data loss, because messages simply accumulate and then drain once the service recovers. In the worst case that there's a logical bug, we can replay messages from any point in time. We obtain this resilience across all of our services by simply maintaining consistent patterns, which as a small team is incredibly valuable.

Consistent architecture

Every service at Unify is composed of only three types of components (aside from the shared database):

- HTTP server: A synchronous HTTP API with JSON requests and responses. Used when serving frontend requests or when synchronous service-to-service communication is strictly required.

- Pulsar consumer: A process that consumes messages from one or more Apache Pulsar topics. We chose Pulsar for its rich feature set and flexible support for different usage patterns, and we use it for all asynchronous processing that happens at high volumes (such as ingesting data in real-time).

- Temporal worker: A process that picks up and completes Temporal workflows and activities for the service. Temporal is our swiss army knife that serves a long tail of use cases such as long-running processes initiated in the UI, scheduled jobs, ad hoc tasks, and more.

Different services use different combinations of these component types. Some services require only a single Temporal worker deployment, whereas other services require multiple server, worker, and consumer deployment groups.

In day-to-day development, these patterns empower developers to move between services quickly and with ease. We’ve made substantial investments into the developer experience and tooling around each component type, and we have monitoring and alerting templates for each to make it easy to spin up new services with comprehensive observability.

Why we deployed Kubernetes during week 1

Like many companies, we use Kubernetes. Unlike many startups, however, we set up our Kubernetes cluster the first week of starting development on the product.

The advice often given to startups is to avoid complex infrastructure like Kubernetes and to start with simpler solutions that will provide room to focus on other priorities. But our early team already had experience working with Kubernetes, and we knew the product we wanted to create would benefit greatly from being built on solid footing. This early bet paid dividends as we scaled the product over the next two years.

Kubernetes’ features around high-availability deployments, networking, service configuration, and more have proven invaluable as our volume of users and features has grown. Integrations with AWS load balancers, Route 53, Datadog, and more simplify many of our most critical infrastructure considerations when developing applications.

Moreover, everyone on our team knows how to inspect Kubernetes deployments, troubleshoot issues, and create manifests for new services. Choosing Kubernetes as the developer platform for our team has provided a great blend of flexibility and consistency that everyone enjoys using.

How we think about continuous delivery

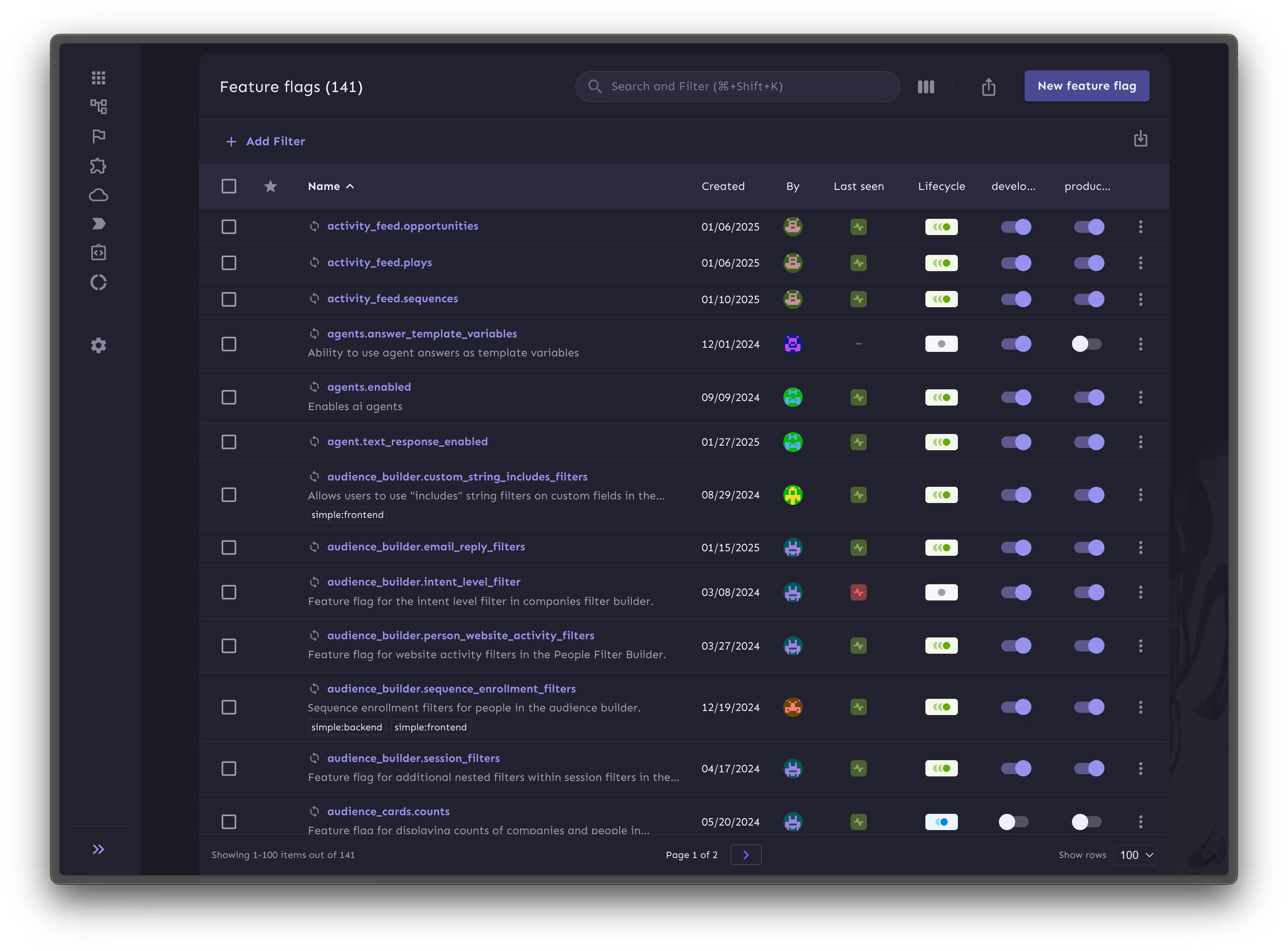

Rapid product and engineering velocity requires fast deployment cycles. However, we had to think carefully about how to achieve that while maintaining a stable and bug-free production environment. The most important step we took was to use feature flags to decouple deployments from releases.

Our production environment is deployed off the main branch multiple times per day; anyone on the team can run a deployment to get the latest code out. The only requirement is that deployments be monitored as they go out. This poses a challenge, however, because it’s hard to have full context on every single change going out in a deploy.

To solve this, we ensure all significant changes are feature flagged when the code goes out. Once deployed, feature developers can release their features to select users for testing and feedback before performing a wider rollout. Often, we release features for internal dogfooding first—our growth and sales team uses our product extensively.

If a feature isn’t working as intended, it can be turned off instantly without reverting code or rolling back any deployments. This eliminates much of the risk associated with releasing code changes. We see velocity and risk as inverses: as long as we make code changes safe, we can move fast.

What's next?

Maintaining a fast development pace on a growing product requires obtaining leverage wherever possible. For our team, carefully chosen technologies and patterns have come to feel like superpowers when building new features and scaling to new heights.

We love embracing the challenges that come with building exceptional products—if you do too, join our team. Stay tuned for future posts where we’ll dive into the most interesting technical challenges we’ve tackled so far and things we’ve learned along the way!

%20(1).png)

%20(1).avif)

.avif)

.avif)

%20(2).avif)

.avif)